High-Level Architecture of The Bommber

Published on September 7, 2025

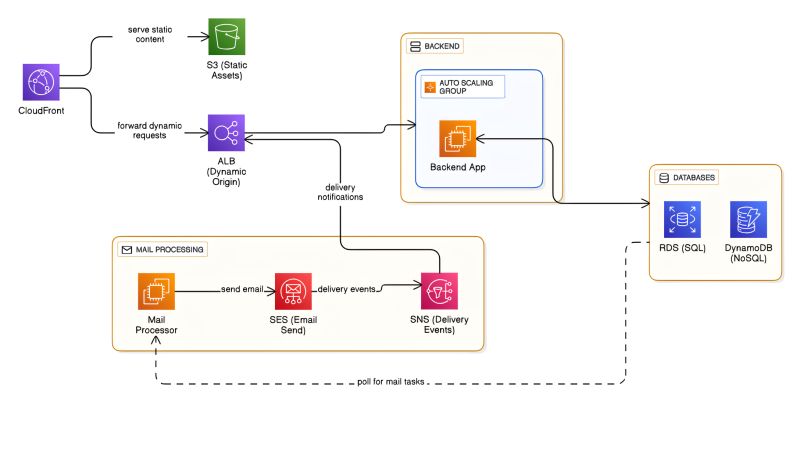

When I first started designing The Bommber, an interactive email marketing platform, the goal was clear: build something fast, scalable, and reliable without overcomplicating the stack. Although the project has since been closed, the architectural journey was a great learning experience worth sharing.

1. CloudFront + S3 + ALB (Static vs. Dynamic Separation)

The frontend layer was designed to optimize performance:

Amazon CloudFront (CDN): Used as the entry point for all traffic. It helped reduce latency through global edge caching.

Two Origins:

* S3 (Static Assets): Served JavaScript, CSS, images, and AMP-compatible email templates directly from an S3 bucket.

* ALB (Application Load Balancer): Forwarded dynamic requests to the backend app running on EC2.

Why this worked: By splitting static and dynamic requests, we kept static traffic off the backend entirely, which reduced backend load and improved response times.
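To make the static/dynamic split concrete, here is a minimal sketch of how ordered path patterns can map a request to one of the two origins. CloudFront evaluates cache behaviors in order and falls back to a default behavior. The path patterns and origin names below are hypothetical, not the ones The Bommber actually used, and Python's `fnmatchcase` only approximates CloudFront's wildcard matching.

```python
from fnmatch import fnmatchcase

# Hypothetical cache behaviors, checked top to bottom.
# The final "*" entry plays the role of the default behavior.
BEHAVIORS = [
    ("/static/*", "s3-static-assets"),
    ("/templates/*", "s3-static-assets"),
    ("*", "alb-backend"),
]


def choose_origin(path: str) -> str:
    """Return the origin name for the first pattern that matches the path."""
    for pattern, origin in BEHAVIORS:
        if fnmatchcase(path, pattern):
            return origin
    # Unreachable while a catch-all "*" pattern is present.
    raise ValueError(f"no behavior matches {path!r}")


print(choose_origin("/static/app.js"))   # prints s3-static-assets
print(choose_origin("/api/campaigns"))   # prints alb-backend
```

Anything that does not match a static pattern falls through to the load balancer, so only dynamic requests ever reach EC2.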

2. Backend (Monolithic, Auto-Scaled)

An Auto Scaling Group of EC2 instances ran a monolithic backend app. The decision to stick with a monolith instead of microservices was intentional. It reduced the time to market, simplified development, and allowed us to iterate faster. Auto Scaling ensured that variable loads (like campaign launches or peak sending times) were handled smoothly.

Why this worked: Simple deployment, fewer moving parts, and elastic scalability.

3. Databases (Hybrid SQL + NoSQL)

Choosing the right data store was critical since The Bommber had structured and unstructured data needs:

* Amazon RDS (SQL): Stored structured entities like users, accounts, billing, and campaign configurations.

* Amazon DynamoDB (NoSQL): Managed unstructured quiz data and dynamic content linked to campaigns.

Why this worked: This hybrid approach gave us both transactional reliability (RDS) and flexibility at scale (DynamoDB).

4. Mail Workflow (Asynchronous & Reliable)

The heart of The Bommber was email delivery.

1. Mail Processor: Polled the database for pending mail tasks.

2. Amazon SES (Simple Email Service): Sent the actual emails.

3. SNS (Delivery Events): SES published delivery events (success, bounce, complaint).

4. SQS Queue: Captured these events for durability.

5. Backend Consumer: Picked up events and updated the mail status in the database.
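The sending half of this flow (step 1 handing work to step 2) can be sketched roughly as follows. This is an illustration under assumptions, not the original implementation: the `mail_tasks` table, its columns, and the `send` callable are hypothetical, SQLite stands in for the real database, and `send` stands in for the call to SES.

```python
import sqlite3
from typing import Callable

# Hypothetical schema for pending mail tasks.
SCHEMA = """
CREATE TABLE mail_tasks (
    id INTEGER PRIMARY KEY,
    recipient TEXT NOT NULL,
    status TEXT NOT NULL DEFAULT 'pending',
    message_id TEXT
)
"""


def process_pending(
    conn: sqlite3.Connection,
    send: Callable[[str], str],
    batch_size: int = 10,
) -> int:
    """Send one batch of pending tasks and return how many were sent."""
    rows = conn.execute(
        "SELECT id, recipient FROM mail_tasks "
        "WHERE status = 'pending' ORDER BY id LIMIT ?",
        (batch_size,),
    ).fetchall()
    sent = 0
    for task_id, recipient in rows:
        try:
            # `send` is assumed to return the provider's message ID.
            message_id = send(recipient)
        except Exception:
            conn.execute(
                "UPDATE mail_tasks SET status = 'failed' WHERE id = ?",
                (task_id,),
            )
            continue
        # Store the message ID so later delivery events can be matched.
        conn.execute(
            "UPDATE mail_tasks SET status = 'sent', message_id = ? WHERE id = ?",
            (message_id, task_id),
        )
        sent += 1
    conn.commit()
    return sent
```

Note that the processor only records "sent"; whether the email was delivered, bounced, or drew a complaint arrives later through the event path.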

Why this worked:

* Separation of concerns: The sending unit only focused on sending emails, while delivery status updates were fully decoupled.

* Reliability: Even if the backend was briefly unavailable, SQS held the delivery events until the consumer came back and processed them, so none were lost.
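As a rough sketch of the consumer side, the snippet below unwraps one queue message into a message ID and a status. It assumes SES notifications are delivered through SNS to SQS without raw message delivery, so the SQS body is an SNS envelope whose `Message` field holds the SES notification as a JSON string. The status names in `STATUS_BY_TYPE` are hypothetical. Because standard SQS queues can deliver a message more than once, the resulting update should be safe to apply repeatedly.

```python
import json
from typing import Tuple

# Hypothetical mapping from SES notification types to internal statuses.
STATUS_BY_TYPE = {
    "Delivery": "delivered",
    "Bounce": "bounced",
    "Complaint": "complained",
}


def parse_delivery_event(sqs_body: str) -> Tuple[str, str]:
    """Return (message_id, status) from an SNS-wrapped SES notification."""
    envelope = json.loads(sqs_body)                 # SNS envelope
    notification = json.loads(envelope["Message"])  # SES notification
    message_id = notification["mail"]["messageId"]
    status = STATUS_BY_TYPE[notification["notificationType"]]
    return message_id, status


# A trimmed-down example body; real notifications carry many more fields.
sample_body = json.dumps({
    "Type": "Notification",
    "Message": json.dumps({
        "notificationType": "Bounce",
        "mail": {"messageId": "abc-123"},
    }),
})

print(parse_delivery_event(sample_body))  # prints ('abc-123', 'bounced')
```

Setting a status keyed by message ID is naturally idempotent, which is why a duplicate delivery of the same event does no harm here.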

Why This Architecture Worked

1. Performance: CloudFront caching reduced latency worldwide.

2. Scalability: Auto Scaling handled spikes automatically.

3. Flexibility: SQL + NoSQL balanced transactional and unstructured data needs.

4. Reliability: Asynchronous delivery tracking ensured accurate email status updates.

5. Speed to Market: A monolith kept development and deployment simple.

Reflection & Improvements

If I revisited this design today, I would enhance it with:

1. Containers (ECS/EKS): Easier deployment and scaling than on raw EC2 instances.

2. Redis (e.g., via ElastiCache): In-memory caching for session data and repeated queries.

3. CI/CD Pipelines: Automated deployments for safer and faster iteration.

4. Modular Monolith: A structured monolith that eases eventual migration to microservices if needed.

Closing Thoughts

Even though The Bommber project ended, designing this architecture gave me hands-on experience in building a scalable, performant, and resilient cloud-native platform. Sometimes, the best architectures are the ones that balance speed of delivery with technical soundness, rather than chasing trends prematurely.